The Institute for Systems Integrity (ISI) examines how decisions fail under pressure, and what governance, design, and accountability are required to protect people, institutions, and public trust.

Current Publication

Digital Transition Risk: Why Non-Tech Boards Inherit Tech-Grade Exposure

Digital transformation is often framed as an operational or technological upgrade. This paper examines a less discussed reality: how digital dependency fundamentally reshapes enterprise risk. As organisations adopt cloud systems, electronic records, vendor-managed infrastructure, and AI-enabled tools, boards inherit technology-grade exposure irrespective of industry classification.

Featured Publication

Decision-Making Under System Stress

Foundation Article #1

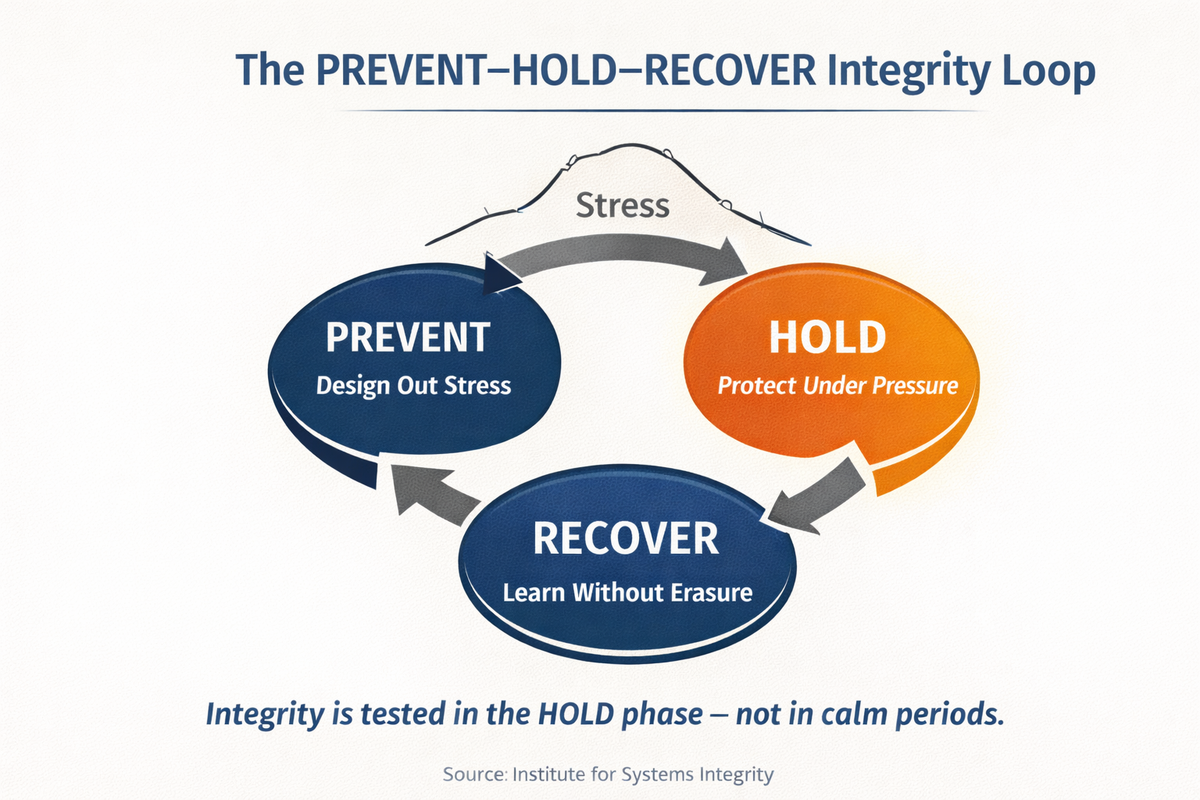

Why capable, ethical people make weaker decisions under pressure — and what integrity requires of the systems that govern them.

Most serious failures do not begin with bad decisions.

They begin with stressed systems.

This foundational paper examines how sustained pressure constrains time, attention, and information, producing predictable degradation in decision-making, even among highly capable professionals.

Latest Publication

Mentoring as Infrastructure: Learning, Power, and Risk in Organisational Design

Mentoring is widely treated as goodwill.

In practice, it behaves like infrastructure.

When designed well, it accelerates learning and strengthens judgment. When left to intention alone, it can narrow thinking, create dependency, obscure power, and amplify risk. This paper reframes mentoring as a learning control system, outlining benefits, predictable failure modes, and the safeguards required to protect judgment, independence, and decision quality.

The Residual Risk Budget: Why “Net Zero” Still Requires Governance

Net zero is often described as a destination: emissions reduced, offsets applied, balance achieved. But this framing can obscure a critical governance reality. Even under credible net-zero pathways, residual emissions, residual harms, and residual uncertainties remain. They do not disappear; they are redistributed across systems, stakeholders, and time. This paper introduces the concept of the Residual Risk Budget: the remaining exposure that must be made visible, owned, and adaptively governed. Without this discipline, net zero risks becoming an accounting construct that masks ethical trade-offs and accelerates integrity drift.

Beyond Legality: Why Boards Must Ask “Should We?”

In contemporary governance, legality is often treated as the primary decision threshold. Yet many organisational failures arise not from illegal actions, but from decisions that were lawful, compliant, and ultimately indefensible. This ISI paper examines the critical distinction between “Can we?” and “Should we?”, arguing that resilient boards must govern beyond permission alone and embed integrity as a core decision discipline.

Governing Wicked Problems in Healthcare: An Integrity Architecture for AI, Sustainability, and Net Zero

Healthcare systems are entering a period of unprecedented complexity. Artificial intelligence, sustainability pressures, and net zero commitments are converging within institutions not originally designed to absorb this pace and scale of change. This paper argues that these challenges are best understood not as technical or compliance problems, but as wicked problems requiring a fundamentally different governance response.

Governing AI in Healthcare: A Practical Integrity Architecture

This paper sets out why AI governance most often fails after deployment, not at approval. In real clinical environments, performance, safety, and accountability are shaped by workflow, staffing, training, and local judgment, not by the model alone. It presents a practical integrity architecture for healthcare AI, designed to detect drift, preserve clinical judgment, and enable correction under operational pressure, before harm becomes visible to patients or boards.

Beyond AI Compliance: Designing Integrity at Scale

This paper examines why most AI failures do not begin with flawed technology, but with governance systems that prioritise reassurance over judgment. As AI accelerates decision-making across complex organisations, traditional compliance frameworks struggle to detect drift, surface doubt, or correct harm before it becomes visible. It sets out a systems-level approach to AI governance, one that treats integrity as an architectural property, designed deliberately into authority, accountability, and the capacity to pause under pressure.

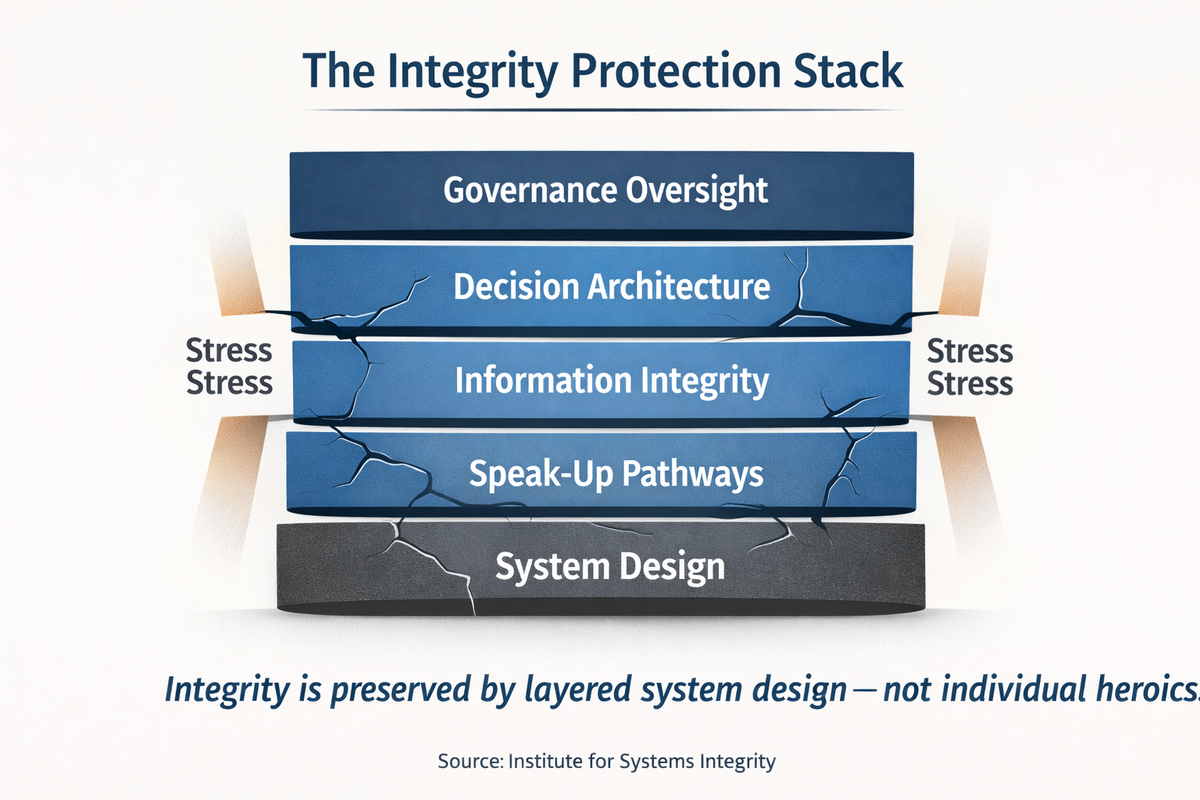

The Systems Integrity Toolkit — Phase I

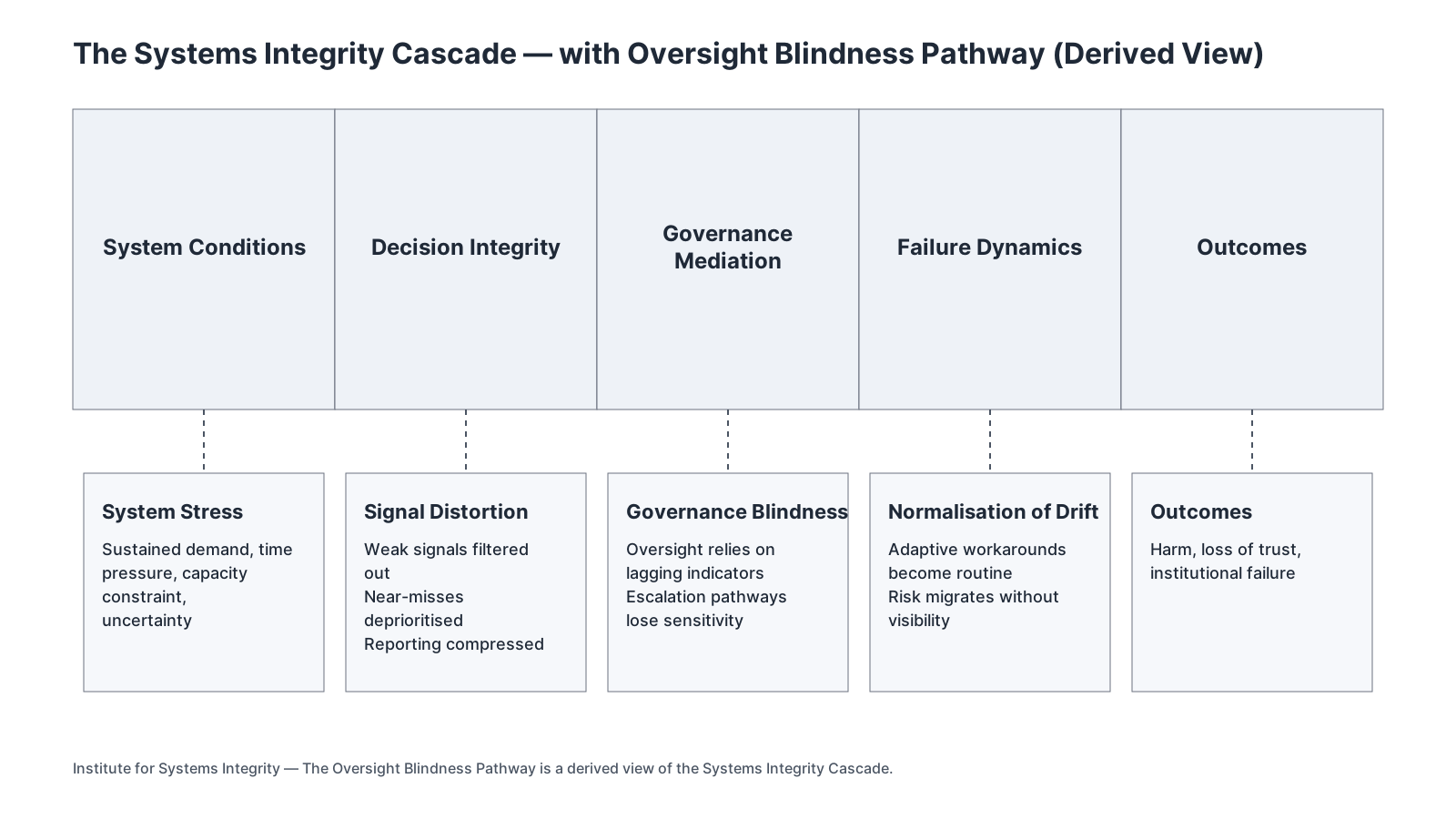

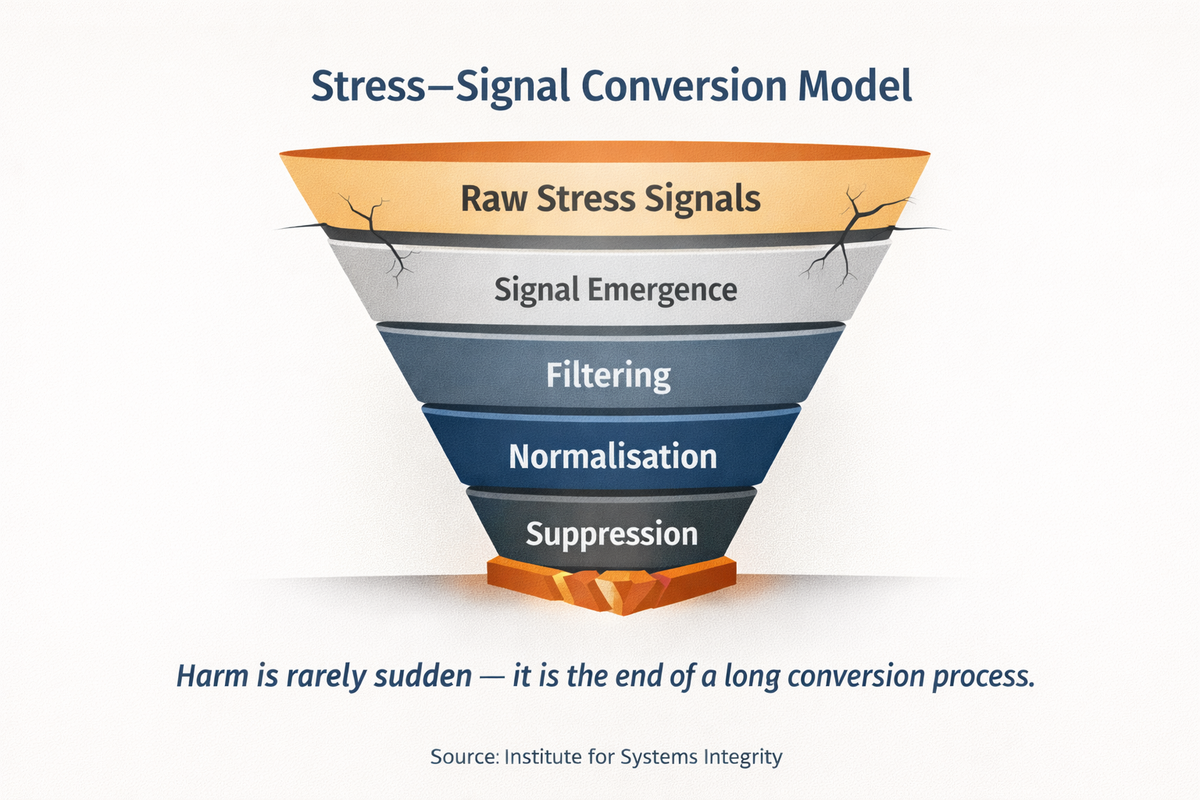

Why most integrity failures are not visible in time — and how systems allow harm to accumulate before anyone intervenes

Foundation Toolkit #1

This paper introduces the Systems Integrity Toolkit — Phase I, a governance architecture that consolidates ISI’s foundational research into a practical framework for identifying integrity risk before outcomes harden, showing how system stress, decision degradation, governance mediation, and failure dynamics interact long before harm becomes visible.

Most systems don’t fail because they can’t see the problem.

They fail because they can’t change the things they’ve learned to protect.

As a companion to the Systems Integrity Toolkit — Phase I, this paper examines why integrity risks persist even after they become visible. It explores systemic refusal — the quiet protection of certain variables from change — and shows how governance under pressure can stabilise harm rather than correct it. Together, the Toolkit and this analysis describe a familiar condition in complex organisations: clarity without permission to change.

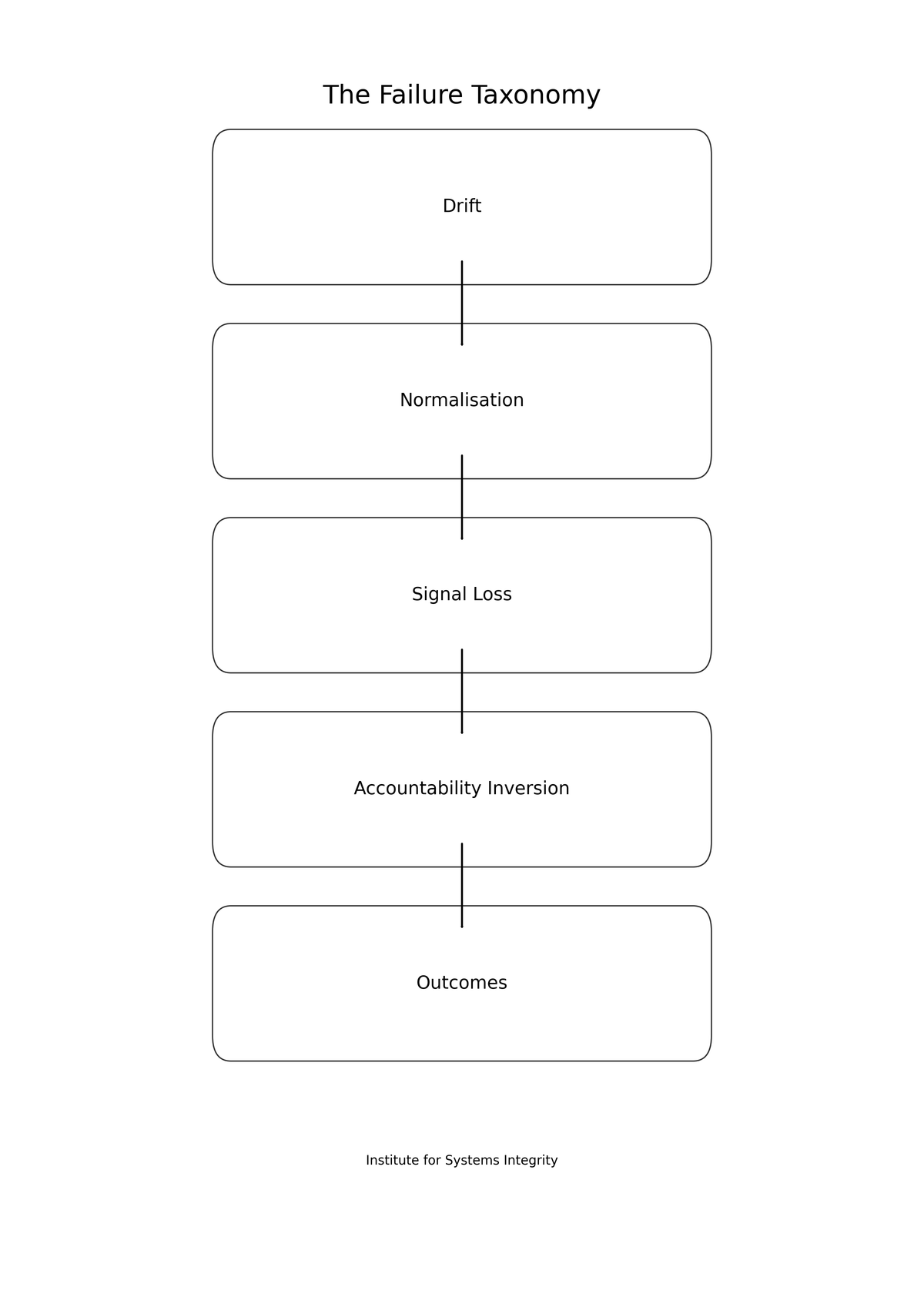

The Failure Taxonomy: How Harm Emerges Without Malice

Why most disasters are caused not by bad people, but by predictable system drift

Foundation Article #4

This paper introduces the Failure Taxonomy — a structural model showing how harm accumulates in complex systems through drift, signal loss, and accountability inversion, without anyone intending it.

Companion to Foundation Article #4

The ISI Pause Principle explains why governance fails when reaction replaces reflection. Under pressure, systems that remove space between signal and response degrade judgment, suppress warning signs, and invert accountability. Pause is not a leadership trait — it is a governance control condition.

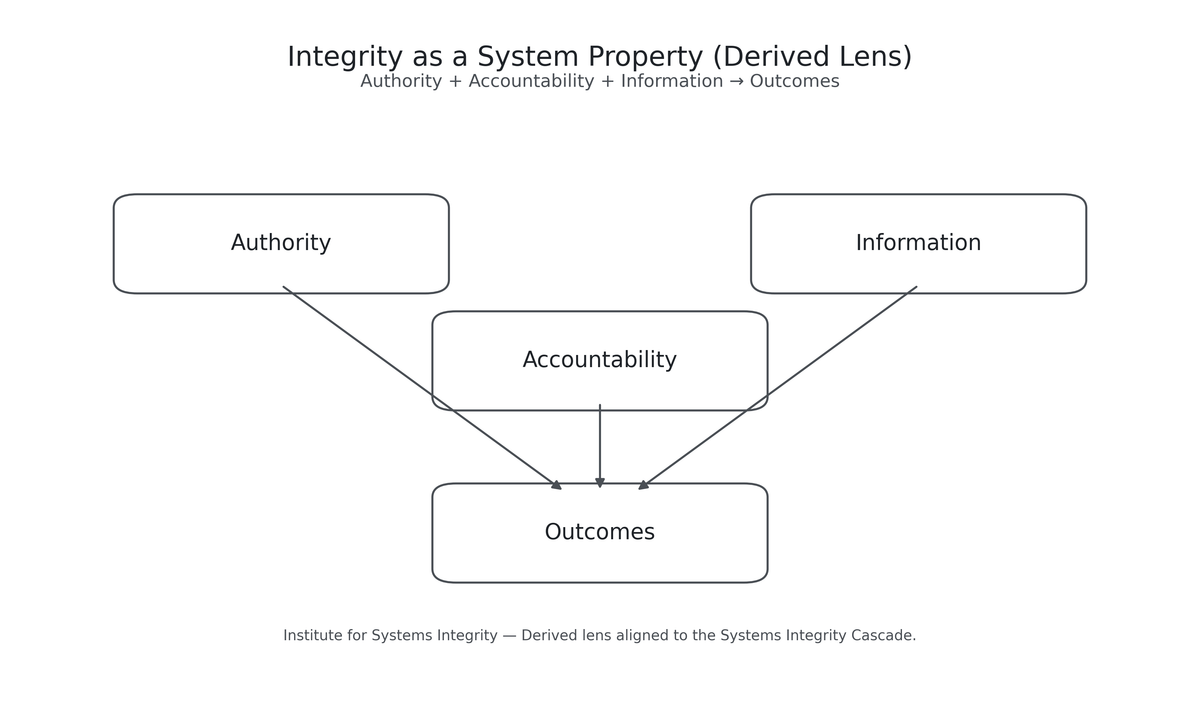

Integrity is a System Property

Why outcomes reflect design, not intent

Foundation Article #3

Integrity is often treated as a personal trait. This paper shows why it is better understood as a system property — shaped by how authority, accountability, and information are aligned under stress, and why outcomes reflect design rather than intent.

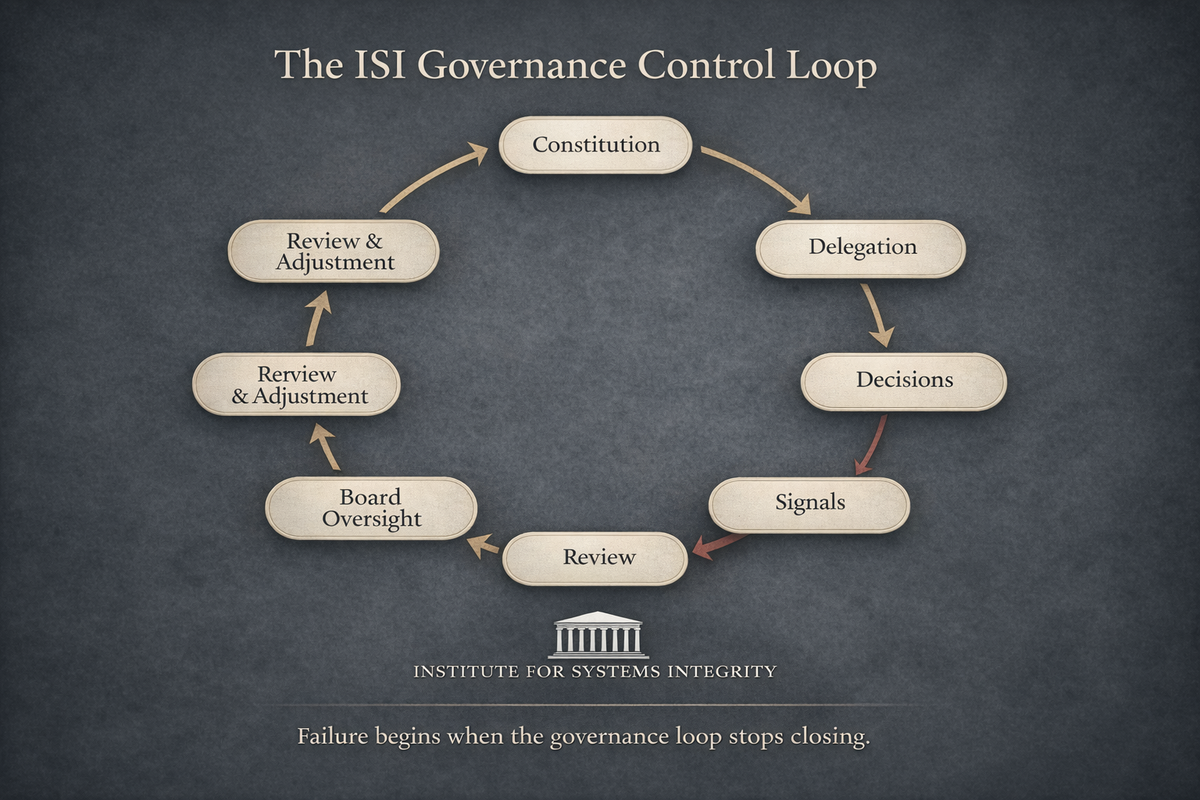

When the Constitution Becomes a Weapon

How governance drift turns compliance into a liability under system stress

Companion to Foundation Article #3

This paper examines how constitutions, delegations, and oversight structures can remain legally intact while drifting out of alignment with real decision-making, allowing compliance to persist even as governance control erodes.

Why Oversight Fails Under Pressure

Foundation Article #2

How system stress distorts visibility, weakens governance, and produces predictable outcomes

Governance mechanisms designed for stable conditions often lose sensitivity under sustained stress.

Signals distort. Drift normalises. Oversight becomes selectively blind.

This paper examines why failures emerge quietly — and why outcomes are best understood as properties of system design, not individual intent.

When Resilience Appears, Governance Has Already Failed

Why frontline heroics are a warning signal, not a success story

Companion to Foundation Article #2

Frameworks

About the Institute (ISI)

Institute for Systems Integrity (ISI)

The Institute for Systems Integrity is an independent research and analysis initiative examining how complex systems fail under stress — and how integrity erodes across institutions even in the absence of malice or incompetence.

The Institute focuses on decision-making, governance, leadership, and accountability within high-stakes environments, including healthcare, technology, cyber security, sustainability, and business management.

Its work is analytical rather than advisory, and is intended to support boards, executives, policymakers, clinicians, and researchers in understanding systemic risk, institutional drift, and delayed harm.

The Institute operates independently and does not provide consulting or commercial services.

The Institute publishes deliberately and in phases. Additional papers will be added to this series over time.

© 2026 Institute for Systems Integrity. All rights reserved.

Content may be quoted or referenced with attribution.

Commercial reproduction requires written permission.